Estimation of Parameters#

Now we get into some basic topics from statistics. We make some assumptions about our problem by prescribing a parametric model (e.g. a distribution that describes how the data were generated), then we fit the parameters of the model to the data. How do we choose the values of the parameters?

Maximum likelihood estimation#

A common way to fit parameters is maximum likelihood estimation (MLE). The basic principle of MLE is to choose values that “explain” the data best by maximizing the probability/density of the data we’ve seen as a function of the parameters. Suppose we have random variables \(X_1, \dots, X_n\) and corresponding observations \(x_1, \dots, x_n\). Then

where \(\mathcal{L}\) is the likelihood function

Often, we assume that \(X_1, \dots, X_n\) are i.i.d. Then we can write

At this point, it is usually convenient to take logs, giving rise to the log-likelihood

This is a valid operation because the probabilities/densities are assumed to be positive, and since log is a monotonically increasing function, it preserves ordering. In other words, any maximizer of \(\log\mathcal{L}\) will also maximize \(\mathcal{L}\).

For some distributions, it is possible to analytically solve for the maximum likelihood estimator. If \(\log\mathcal{L}\) is differentiable, setting the derivatives to zero and trying to solve for \(\mathbf{\theta}\) is a good place to start.

Maximum Likelihood Estimation for the Multivariate Normal#

Suppose we observe data points \(\mathbf{x}_1, \dots, \mathbf{x}_n \in \mathbb{R}^d\) assumed to be i.i.d. samples from a multivariate normal distribution with unknown parameters:

We want to estimate \(\boldsymbol{\mu} \in \mathbb{R}^d\) and \(\boldsymbol{\Sigma} \in \mathbb{R}^{d \times d}\), where \(\boldsymbol{\Sigma}\) is symmetric and positive definite.

Step 1: Write the Likelihood#

The density of the multivariate normal is:

Since the samples are i.i.d., the joint likelihood is:

Step 2: Take the Log-Likelihood#

Take logarithms to get a sum:

Only the last two terms depend on the parameters, so we optimize:

Step 3a: Maximize with Respect to \(\boldsymbol{\mu}\)#

We take the gradient with respect to \(\boldsymbol{\mu}\):

Set the derivative to zero:

So the MLE for the mean is:

Step 3b: Maximize with Respect to \(\boldsymbol{\Sigma}\)#

Substitute \(\hat{\boldsymbol{\mu}}\) into the log-likelihood:

Define the centered data:

Then the negative log-likelihood becomes:

We define the scatter matrix:

Then:

Take the derivative with respect to \(\boldsymbol{\Sigma}^{-1}\) (using matrix calculus):

Set to zero:

Summary#

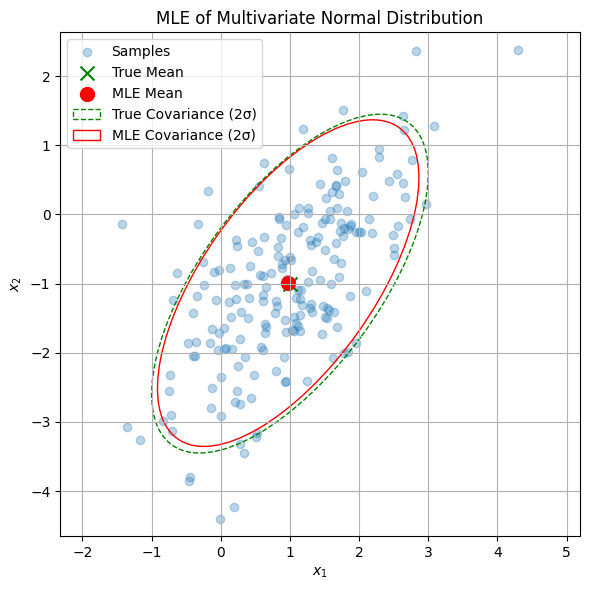

The MLE for multivariate normal distribution yields:

Mean vector:

\[ \hat{\boldsymbol{\mu}} = \frac{1}{n} \sum_{i=1}^n \mathbf{x}_i \]Covariance matrix:

\[ \hat{\boldsymbol{\Sigma}} = \frac{1}{n} \sum_{i=1}^n (\mathbf{x}_i - \hat{\boldsymbol{\mu}})(\mathbf{x}_i - \hat{\boldsymbol{\mu}})^\top \]

This is the sample mean and the (biased) sample covariance (with denominator \(n\)).

Show code cell source

# Re-import required libraries after kernel reset

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.patches import Ellipse

from scipy.stats import multivariate_normal

def plot_cov_ellipse(mean, cov, ax, n_std=2.0, facecolor='none', edgecolor='black', linestyle='-', label=None):

"""Add a 2D covariance ellipse to an existing plot."""

vals, vecs = np.linalg.eigh(cov)

order = vals.argsort()[::-1]

vals, vecs = vals[order], vecs[:, order]

theta = np.degrees(np.arctan2(*vecs[:, 0][::-1]))

width, height = 2 * n_std * np.sqrt(vals)

ellipse = Ellipse(xy=mean, width=width, height=height, angle=theta,

facecolor=facecolor, edgecolor=edgecolor, linestyle=linestyle, label=label)

ax.add_patch(ellipse)

def mle_multivariate_normal_demo(n=200, d=2, seed=42):

np.random.seed(seed)

# True parameters

mu_true = np.array([1.0, -1.0])

Sigma_true = np.array([[1.0, 0.8],

[0.8, 1.5]])

# Generate data

X = np.random.multivariate_normal(mu_true, Sigma_true, size=n)

# MLE estimates

mu_mle = np.mean(X, axis=0)

Sigma_mle = np.cov(X.T, bias=True) # bias=True uses 1/n

print("True mean:", mu_true)

print("MLE mean :", mu_mle)

print("True covariance:\n", Sigma_true)

print("MLE covariance:\n", Sigma_mle)

# Plot

fig, ax = plt.subplots(figsize=(6, 6))

ax.scatter(X[:, 0], X[:, 1], alpha=0.3, label='Samples')

ax.scatter(*mu_true, c='green', marker='x', s=100, label='True Mean')

ax.scatter(*mu_mle, c='red', marker='o', s=100, label='MLE Mean')

# Add 2-sigma ellipses

plot_cov_ellipse(mu_true, Sigma_true, ax, n_std=2.0, edgecolor='green', linestyle='--', label='True Covariance (2σ)')

plot_cov_ellipse(mu_mle, Sigma_mle, ax, n_std=2.0, edgecolor='red', linestyle='-', label='MLE Covariance (2σ)')

ax.set_title("MLE of Multivariate Normal Distribution")

ax.set_xlabel("$x_1$")

ax.set_ylabel("$x_2$")

ax.grid(True)

ax.axis('equal')

ax.legend()

plt.tight_layout()

plt.show()

# Run the demo

mle_multivariate_normal_demo()

True mean: [ 1. -1.]

MLE mean : [ 0.9745329 -0.99265192]

True covariance:

[[1. 0.8]

[0.8 1.5]]

MLE covariance:

[[0.89347252 0.72037511]

[0.72037511 1.39413223]]

Maximum Likelihood Estimation for Linear Regression#

The likelihood is:

The log-likelihood is:

So we observe that maximizing the log-likelihood is equivalent to minimizing the squared error.

So the MLE for the linear regression model is identical to the OLS estimator:

Based on the estimator for the weights, we can also derive the estimator for the variance. In order to do this, we need to know the gradient of the log-likelihood with respect to the noise variance.

Setting the gradient to zero, we get:

Solving for \(\sigma^2\), we get:

Limitations of MLE in Linear Regression#

While maximum likelihood estimation (MLE) is appealing for its simplicity and optimality under large samples, it has important drawbacks — especially in the context of linear regression with limited data or high-dimensional features.

Example: \(n = d\)#

Consider a linear regression model:

Suppose the number of observations \(n\) is equal to the number of features \(d\). Then the design matrix \(\mathbf{X} \in \mathbb{R}^{n \times d}\) is square, and the MLE is:

In this case, \(\mathbf{X}^\top \mathbf{X}\) is invertible (with probability 1 if \(\mathbf{X}\) is random), and the MLE will perfectly interpolate the data — giving zero training error:

Problem: Overfitting and Variance Underestimation#

The MLE predicts with zero error on training data, but may generalize poorly to new inputs.

The estimated variance becomes:

\[ \hat{\sigma}^2 = \frac{1}{n} \|\mathbf{y} - \mathbf{X} \hat{\mathbf{w}}\|^2 = 0 \]even though we know the data were generated with non-zero noise (\(\sigma^2 > 0\))!

❗ MLE underestimates the variance and is overconfident — especially when \(n\) is small or close to \(d\).

Key Insight#

Small sample sizes lead to overfitting with MLE.

Estimated noise variance can be zero when the model interpolates the data.

We need a way to regularize the parameter estimates and account for uncertainty.

Bayesian Fix: Maximum a Posteriori Estimation#

Maximum a posteriori estimation (MAP) allows us to introduce prior beliefs about the parameters to avoid this degeneracy.

For the multivariate normal, using an appropriate prior distribution allows us to derive a MAP estimate that:

Shrinks the sample covariance toward a prior value

Stays well-defined even when \(n = 1\)

Regularizes estimates in low-data regimes

MAP estimation thus balances data and prior — resulting in more robust parameter estimates when the data are scarce.

In this technique we assume that the parameters are a random variable, and we specify a prior distribution \(p(\mathbf{\theta})\).

Then we can employ Bayes’ rule to compute the posterior distribution of the parameters given the observed data:

Computing the normalizing constant is often intractable, because it involves integrating over the parameter space, which may be very high-dimensional. Fortunately, if we just want the MAP estimate, we don’t care about the normalizing constant! It does not affect which values of \(\mathbf{\theta}\) maximize the posterior.

So we have

Again, if we assume the observations are i.i.d., then we can express this in the equivalent, and possibly friendlier, form

MAP Estimation for Linear Regression#

Let’s apply MAP estimation to the linear regression model.

For example:

Let \(\mathbf{w} \sim \mathcal{N}(0, \tau^2 \mathbf{I})\) — a Gaussian prior centered at zero.

Assume \(\sigma^2\) is known or estimated separately.

The posterior over \(\mathbf{w}\) is:

Taking logs and maximizing yields the MAP estimator:

where the hyperparameter \(\lambda = \sigma^2 / \tau^2\) controls the strength of the regularization.

Similar to the MLE being equivalent to OLS, the MAP estimator is equivalent to ridge regression.

Thus, the MAP estimator is equivalent to the solution of ridge regression:

Conclusion#

MAP estimation provides a natural Bayesian justification for regularization in linear models. It improves generalization, avoids variance collapse, and leads to robust estimates — even when data is scarce.

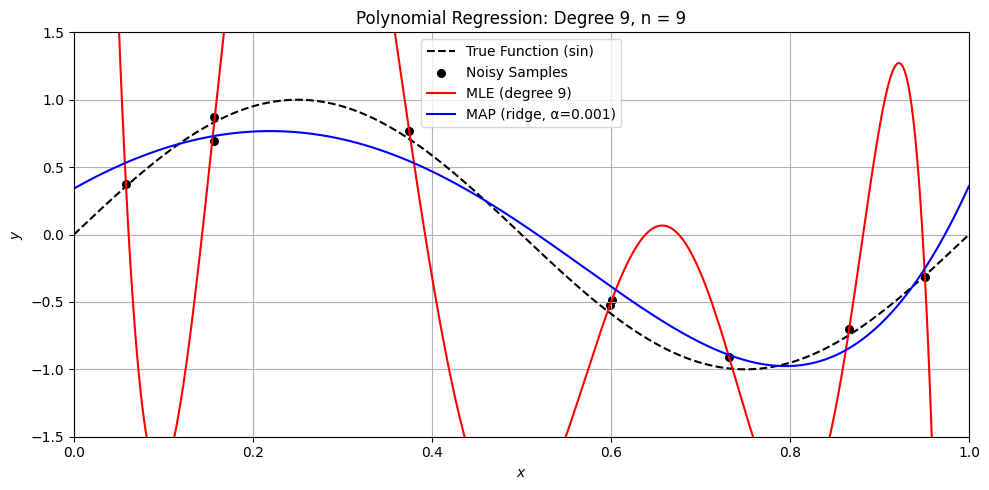

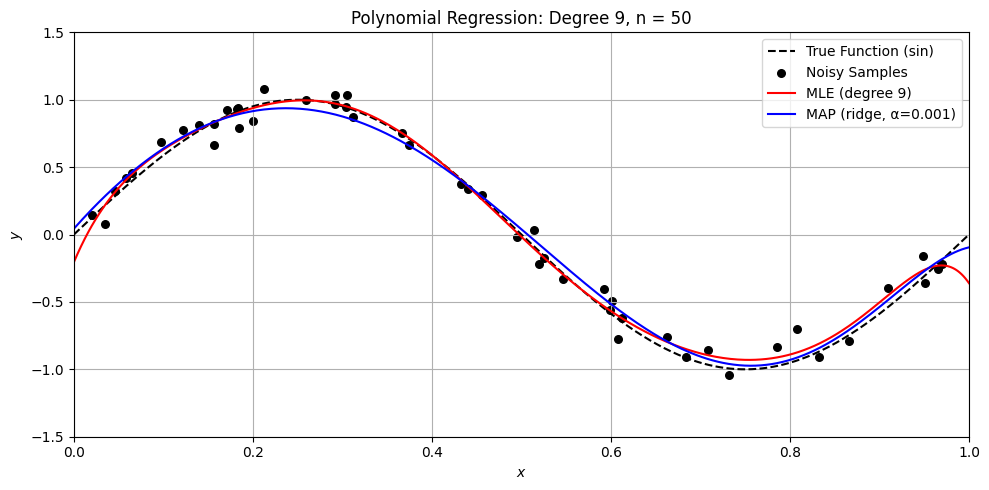

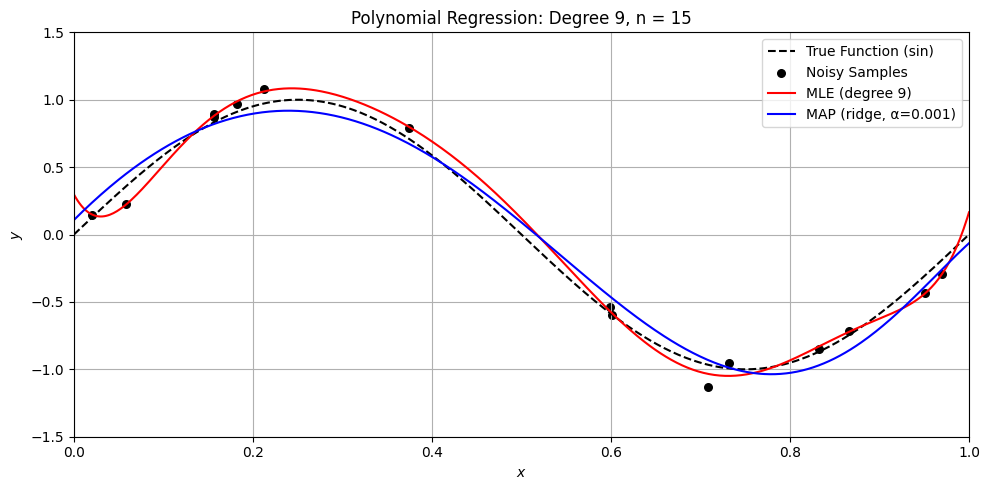

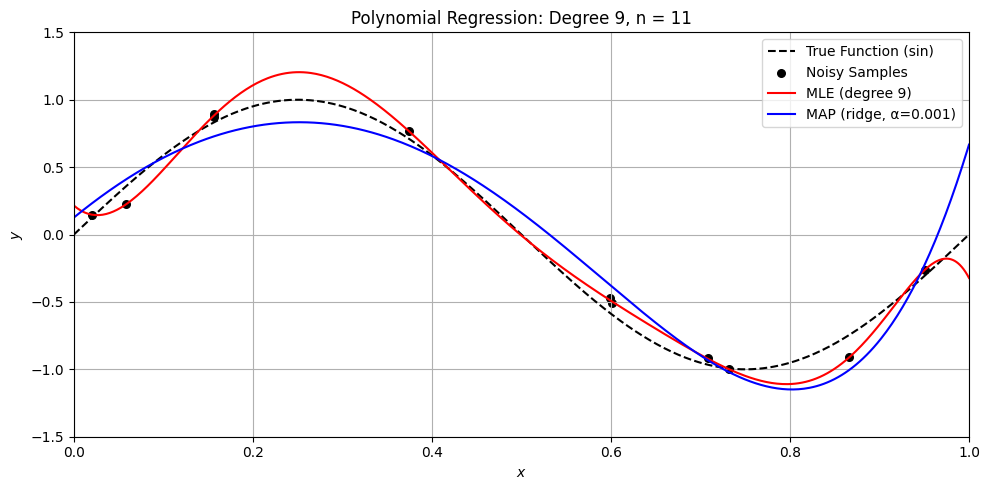

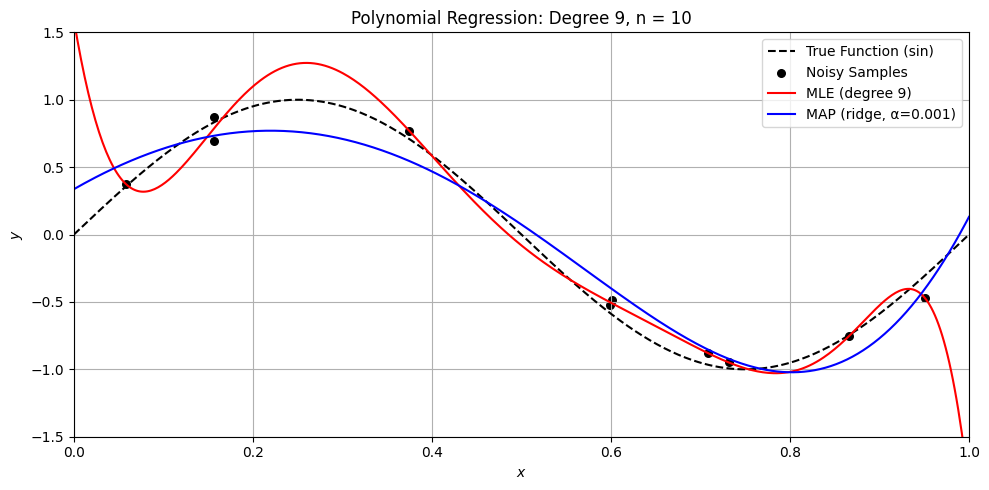

Perfect. Here’s a Python demo that visually compares MLE (ordinary least squares) and MAP (ridge regression) on a small dataset, illustrating:

Overfitting with MLE when \(n \approx d\)

How MAP (ridge) regularizes the solution

Differences in weight magnitude and generalization

Let’s compare MLE and MAP for polynomial regression

Show code cell source

import numpy as np

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures

def sine_polynomial_regression_demo(n=15, degree=14, noise_std=0.1, alpha=1.0, seed=42):

np.random.seed(seed)

Xr =np.random.rand(1000, 1)

yr = np.random.randn(1000)

# === True function ===

def true_func(x): return np.sin(2 * np.pi * x)

# === Generate data ===

X = np.sort(Xr[:n], axis=0)

y = true_func(X).ravel() + yr[:n] * noise_std

# === Generate test data ===

X_test = np.linspace(0, 1, 500).reshape(-1, 1)

y_true = true_func(X_test)

# === Polynomial features ===

poly = PolynomialFeatures(degree, include_bias=True)

X_poly = poly.fit_transform(X)

X_test_poly = poly.transform(X_test)

# u, s, v = la.svd(X_poly)

# i_null = s < 1e-10

# s_inv = np.zeros_like(s)

# s_inv = np.diag(1/s)

# s_inv[i_null] = 0

Xy = X_poly.T @ y

XX = X_poly.T @ X_poly

w_ols = np.linalg.lstsq(XX, Xy, rcond=None)[0]

w_ridge = np.linalg.lstsq(XX + alpha * np.eye(XX.shape[0]), Xy, rcond=None)[0]

# === MLE (Ordinary Least Squares) ===

y_pred_mle = X_test_poly @ w_ols

# === MAP (Ridge Regression) ===

y_pred_map = X_test_poly @ w_ridge

# === Plot ===

plt.figure(figsize=(10, 5))

plt.plot(X_test, y_true, 'k--', label='True Function (sin)')

plt.scatter(X, y, color='black', s=30, label='Noisy Samples')

plt.plot(X_test, y_pred_mle, 'r-', label='MLE (degree {})'.format(degree))

plt.plot(X_test, y_pred_map, 'b-', label='MAP (ridge, α={})'.format(alpha))

plt.title(f'Polynomial Regression: Degree {degree}, n = {n}')

plt.xlabel('$x$')

plt.ylabel('$y$')

plt.ylim(-1.5, 1.5)

plt.xlim(0, 1)

plt.grid(True)

plt.legend()

plt.tight_layout()

plt.show()

# Try degree approaching number of points

degree = 9

alpha = .001

n = [50, 15, 11, 10, 9]

for n_i in n:

sine_polynomial_regression_demo(n=n_i, degree=degree, alpha=alpha)

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: divide by zero encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: overflow encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: invalid value encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: divide by zero encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: overflow encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: invalid value encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: divide by zero encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: overflow encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: invalid value encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: divide by zero encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: overflow encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: invalid value encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: divide by zero encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: overflow encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: invalid value encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: divide by zero encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: overflow encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: invalid value encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: divide by zero encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: overflow encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: invalid value encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: divide by zero encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: overflow encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: invalid value encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: divide by zero encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: overflow encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: invalid value encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: divide by zero encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: overflow encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:34: RuntimeWarning: invalid value encountered in matmul

XX = X_poly.T @ X_poly

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: divide by zero encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: overflow encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: invalid value encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: divide by zero encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: overflow encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: invalid value encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: divide by zero encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: overflow encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:40: RuntimeWarning: invalid value encountered in matmul

y_pred_mle = X_test_poly @ w_ols

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: divide by zero encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: overflow encountered in matmul

y_pred_map = X_test_poly @ w_ridge

/var/folders/n7/1tlnr9g5403gj0lq5b926czm0000gn/T/ipykernel_10198/1236886821.py:44: RuntimeWarning: invalid value encountered in matmul

y_pred_map = X_test_poly @ w_ridge